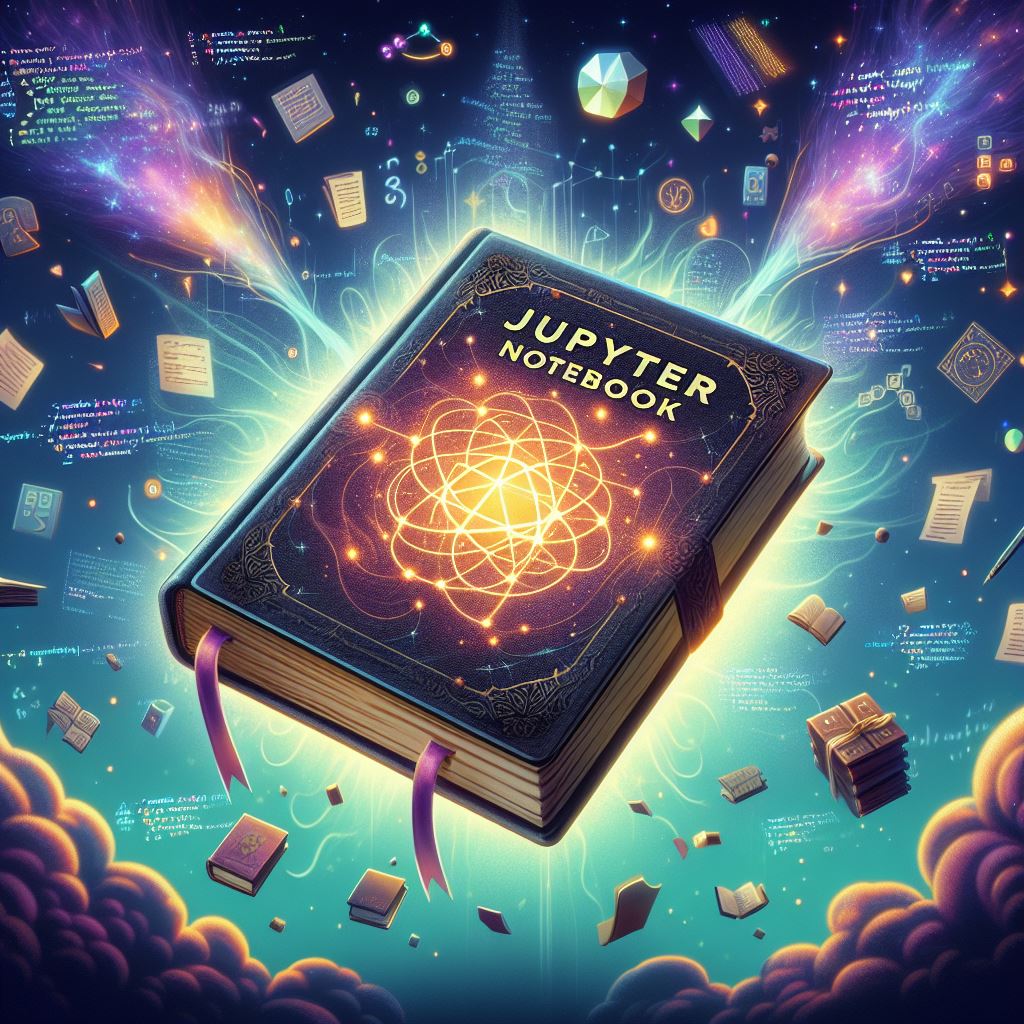

The Power of Jupyter Notebook You Need to Know

Unleashing the Power of Jupyter Notebooks on AWS

Interactive notebooks have changed how developers and data scientists work. Jupyter Notebooks let you run code cell by cell, keep the results next to the code that produced them, and share the whole document with others, which has made them a standard tool for exploratory programming. In this section, we look at what Jupyter Notebooks are, how versatile they are, and what advantages they offer for coding projects of all kinds.

The AWS Advantage

As we journey into the realm of cloud computing, Amazon Web Services (AWS) emerges as a key player, offering a robust infrastructure for hosting a myriad of applications. Here, we introduce the AWS ecosystem and highlight the benefits it brings to the table. From scalability to security, AWS sets the stage for our exploration of integrating Jupyter Notebooks into the cloud environment.

Setting Up Your AWS Environment

Building a solid foundation is crucial before venturing into the cloud. In this section, we guide you through the process of creating a custom AWS Virtual Private Cloud (VPC) from scratch. From configuring subnets to establishing internet gateways, we lay the groundwork for hosting Jupyter Notebooks securely within the AWS infrastructure.
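Before creating the VPC, it can help to plan how its address range will be divided into subnets. The sketch below uses only Python's standard `ipaddress` module. The `10.0.0.0/16` range, the `/24` subnet size, and the helper name `plan_subnets` are illustrative assumptions, not values taken from the course.

```python
import ipaddress
from itertools import islice


def plan_subnets(vpc_cidr, new_prefix, count):
    """Return the first `count` subnets of size /new_prefix inside vpc_cidr."""
    vpc = ipaddress.ip_network(vpc_cidr)
    # subnets() lazily yields every block of the requested size, in order.
    subnets = [str(net) for net in islice(vpc.subnets(new_prefix=new_prefix), count)]
    if len(subnets) < count:
        raise ValueError(f"{vpc_cidr} cannot hold {count} /{new_prefix} subnets")
    return subnets


# Assumed example: one public and one private subnet in a /16 VPC.
public_cidr, private_cidr = plan_subnets("10.0.0.0/16", 24, 2)
print(public_cidr, private_cidr)
```

The resulting CIDR blocks are what you would enter when creating the subnets in the AWS console or through an infrastructure tool.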

Provisioning AWS EC2 Instances

With our AWS environment in place, it’s time to deploy EC2 instances to run our Jupyter Notebooks. We navigate through the intricacies of setting up Ubuntu servers and fine-tuning the configurations to optimize performance and security. By the end of this section, you’ll have a fully operational EC2 instance ready to host your coding projects.

Configuring Jupyter Notebooks on AWS

In this hands-on section, we work through the details of configuring Jupyter Notebooks to run on AWS EC2 instances. From installing and setting up nginx as the web server to using Supervisor for process control, we aim for a smooth and secure deployment of Jupyter in the cloud.
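As a rough illustration of how these pieces fit together, the sketch below generates a Supervisor program entry that keeps Jupyter running and an nginx server block that forwards web traffic to it. The port `8888`, the `ubuntu` user, the directory, the server name, and both helper function names are assumptions made for this example; the course may configure things differently.

```python
import configparser
import io

NGINX_TEMPLATE = """\
server {{
    listen 80;
    server_name {server_name};

    location / {{
        proxy_pass http://127.0.0.1:{port};
        proxy_set_header Host $host;
        # Jupyter kernels communicate over WebSockets, so forward the upgrade headers.
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
    }}
}}
"""


def supervisor_program(name, command, directory, user):
    """Render an INI-style [program:x] section for Supervisor."""
    parser = configparser.ConfigParser(interpolation=None)
    parser[f"program:{name}"] = {
        "command": command,
        "directory": directory,
        "user": user,
        "autostart": "true",
        "autorestart": "true",
    }
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


def nginx_server_block(server_name, port):
    """Render an nginx server block that proxies requests to a local Jupyter."""
    return NGINX_TEMPLATE.format(server_name=server_name, port=port)


# Assumed values for illustration only.
supervisor_conf = supervisor_program(
    name="jupyter",
    command="jupyter notebook --no-browser --ip=127.0.0.1 --port=8888",
    directory="/home/ubuntu/notebooks",
    user="ubuntu",
)
nginx_conf = nginx_server_block("notebooks.example.com", 8888)
print(supervisor_conf)
print(nginx_conf)
```

Binding Jupyter to `127.0.0.1` means only nginx on the same machine can reach it directly, so all outside traffic passes through the web server.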

Running Jupyter Securely in the Cloud

Security is paramount in any computing environment, especially when operating in the cloud. Here, we address key considerations and best practices for securely running Jupyter Notebooks on AWS. From managing access controls to implementing encryption protocols, we fortify our setup to safeguard sensitive data and ensure a protected computing environment.
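One common access-control check is to make sure no inbound rule exposes an administrative port to the entire internet. The sketch below works on a simplified, hypothetical rule format (a list of dictionaries with `port` and `cidr` keys), not the actual AWS security group data structure, and assumes that only HTTPS on port 443 should be publicly reachable.

```python
import ipaddress


def risky_rules(rules, public_ports=frozenset({443})):
    """Return rules that allow any address to reach a port not meant to be public."""
    flagged = []
    for rule in rules:
        network = ipaddress.ip_network(rule["cidr"])
        # A prefix length of 0 (0.0.0.0/0 or ::/0) matches every address.
        if network.prefixlen == 0 and rule["port"] not in public_ports:
            flagged.append(rule)
    return flagged


# Hypothetical inbound rules for illustration; 203.0.113.7 is a documentation address.
inbound = [
    {"port": 22, "cidr": "0.0.0.0/0"},
    {"port": 443, "cidr": "0.0.0.0/0"},
    {"port": 22, "cidr": "203.0.113.7/32"},
]
for rule in risky_rules(inbound):
    print(f"Port {rule['port']} is open to {rule['cidr']}")
```

In this example only the first rule is flagged, because SSH is reachable from any address; restricting it to a known IP range, as in the third rule, removes the warning.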

Real-world Applications and Use Cases

To bring theory into practice, we explore real-world applications and use cases where Jupyter Notebooks on AWS shine brightest. Whether it’s data analysis, machine learning experimentation, or collaborative coding projects, the versatility of Jupyter combined with the scalability of AWS opens doors to endless possibilities.

Advancing Your Jupyter Journey

As you continue working with Jupyter Notebooks on AWS, there is always room for growth and exploration. In this section, we provide resources and guidance for building your skills and going deeper into cloud-based development, from advanced techniques to community-driven insights.

Conclusion and Next Steps

In the concluding section, we reflect on the transformative journey of learning and implementing AWS solutions with Jupyter Notebooks. We recap key learnings, celebrate accomplishments, and chart a course for future endeavors in the dynamic landscape of cloud computing and data science.

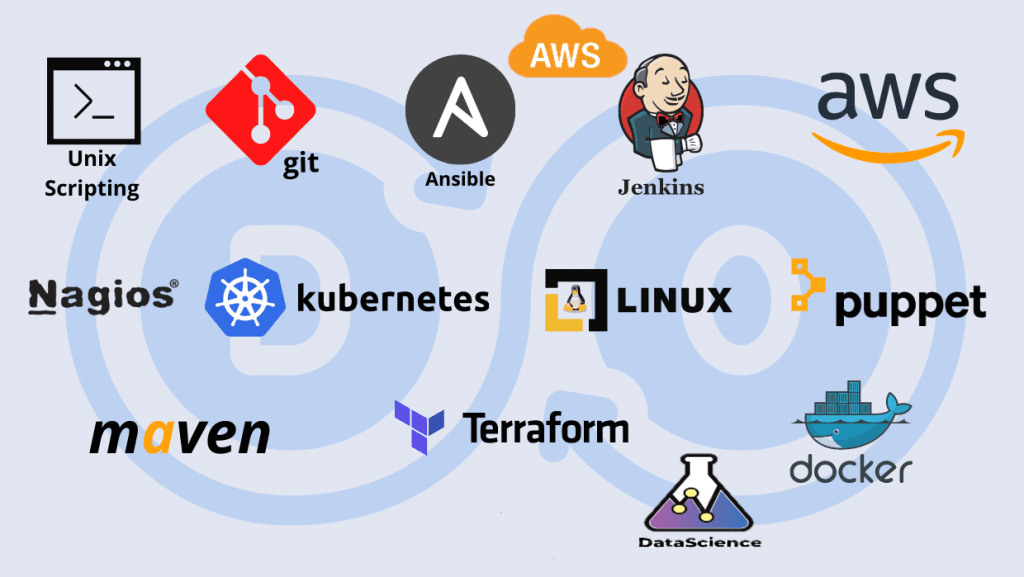

Virtualization 90 Minute Demonstration Crash Free udemy Cour

the secrets of virtualization in just 90 minutes with comprehensive course! practical skills real-world applications for VMware vSphere, Microsoft Hyper-V, AWS.