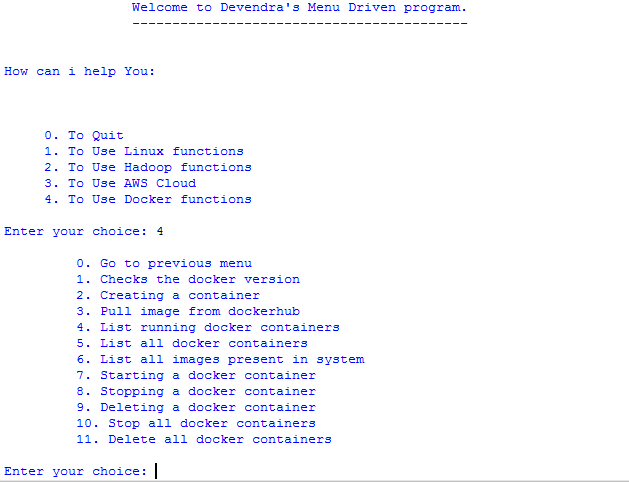

How Much Do Celebrities in India Pay in Taxes?

India’s Top Celebrity Taxpayers in 2024

In India, celebrities aren’t just known for their on-screen performances or chart-topping songs; they also contribute significantly to the nation’s economy. Paying taxes is one of the key ways these stars give back, demonstrating responsibility beyond their glamorous lives. Let’s take a look at the top celebrity taxpayers in India for 2024, highlighting their contributions and impact.

1. Shah Rukh Khan – ₹92 Crore

The “King of Bollywood” isn’t just ruling the box office; he tops the taxpayer list with a whopping ₹92 crore. Known for his blockbuster movies and brand endorsements, Shah Rukh Khan’s contribution reflects his incredible earning power and commitment to the nation.

2. Salman Khan – ₹75 Crore

Salman Khan, a superstar with a massive fan following, paid ₹75 crore in taxes. Whether it’s through movies, TV shows like Bigg Boss, or his Being Human brand, Salman continues to maintain his position as one of Bollywood’s highest earners.

3. Amitabh Bachchan – ₹71 Crore

Even at 81, Amitabh Bachchan continues to inspire generations. Paying ₹71 crore in taxes, Big B remains one of the most active and successful actors in the country. His income comes from films, TV appearances, and numerous advertisements.

4. Ajay Devgn – ₹42 Crore

Ajay Devgn’s ₹42 crore tax contribution is no surprise, given his consistent hits and savvy investments in production and other businesses.

5. Ranbir Kapoor – ₹36 Crore

Ranbir Kapoor, riding high on his recent successes, contributed ₹36 crore in taxes. His earnings from films and endorsements place him among the top earners of 2024.

6. Hrithik Roshan – ₹28 Crore

Hrithik Roshan, known for his impeccable dance moves and blockbuster films, paid ₹28 crore in taxes in 2024.

7. Kapil Sharma – ₹26 Crore

Comedy king Kapil Sharma proves that humor pays well, contributing ₹26 crore in taxes. His popular TV show and live performances remain a significant source of income.

8. Kareena Kapoor Khan – ₹20 Crore

Kareena Kapoor Khan, one of Bollywood’s most iconic actresses, paid ₹20 crore. Her earnings come from films, brand endorsements, and other ventures.

9. Shahid Kapoor – ₹14 Crore

Shahid Kapoor, riding on the success of recent films, paid ₹14 crore in taxes.

10. Kiara Advani – ₹12 Crore

Kiara Advani, a rising star in Bollywood, rounds off the list with ₹12 crore. Her growing popularity and numerous projects contribute to her impressive earnings.

Why It Matters

Celebrities play a significant role in India’s economy, not just through taxes but also by generating employment and inspiring millions. Their tax payments reflect their success and serve as a reminder that with great income comes great responsibility.

The Bigger Picture

India’s tax revenue supports infrastructure, healthcare, education, and welfare programs. Celebrities contributing such large amounts set an example for others, showing that paying taxes is both necessary and beneficial for the nation’s growth.

Final Thoughts

The 2024 list of top celebrity taxpayers highlights the impressive earnings and social responsibility of these stars. While they entertain us on-screen, their contributions off-screen make a significant difference. Their actions remind us that success is about more than personal gain; it’s also about giving back to society.