The Magic of Caching: How Netflix Holds Our Attention

How Caching Makes Netflix Faster and Easier

Netflix is synonymous with seamless streaming and personalized entertainment. Achieving this level of user satisfaction isn’t just about great content; it’s about delivering that content without delay. One of Netflix’s secret weapons in this endeavor is EVCache, a distributed in-memory key-value store that powers key functionalities across the platform.

Let’s dive into four major ways Netflix uses caching to enhance the user experience and keep us binge-watching for hours.

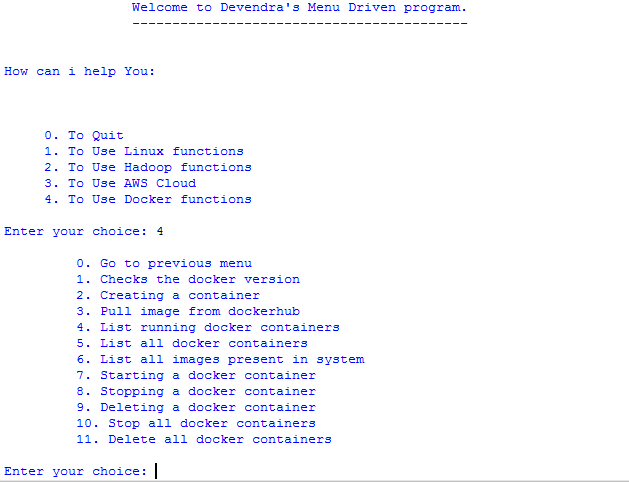

1. Look-aside Cache: Delivering Data at Lightning Speed

How It Works

A look-aside cache stores frequently accessed data, ensuring that critical information is readily available without overloading backend databases.

- When an application requests data, it first checks EVCache.

- If the data is present (a cache hit), it is served instantly.

- If the data is absent (a cache miss), the application fetches it from a backend service, such as Cassandra.

- Once retrieved, the data is stored in EVCache for future use.
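The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not Netflix's implementation: a plain dictionary stands in for EVCache, and the `LookAsideCache` class, the `BACKEND` dictionary, and the key format are all hypothetical names chosen for the example.

```python
from typing import Callable, Dict, Optional


class LookAsideCache:
    """Toy look-aside cache; a plain dict stands in for EVCache."""

    def __init__(self, load_from_backend: Callable[[str], Optional[str]]) -> None:
        self._store: Dict[str, str] = {}
        self._load_from_backend = load_from_backend
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> Optional[str]:
        # Step 1: check the cache first.
        if key in self._store:
            # Step 2: cache hit, serve directly.
            self.hits += 1
            return self._store[key]
        # Step 3: cache miss, fall back to the backend.
        self.misses += 1
        value = self._load_from_backend(key)
        # Step 4: store the result so the next read is a hit.
        if value is not None:
            self._store[key] = value
        return value


# Hypothetical backend, standing in for a service such as Cassandra.
BACKEND = {"user:42:recs": "Stranger Things, Dark"}

cache = LookAsideCache(BACKEND.get)
first = cache.get("user:42:recs")   # miss: loaded from the backend, then cached
second = cache.get("user:42:recs")  # hit: served from the cache
```

The application owns the fallback logic here: the cache never talks to the backend on its own, which is what distinguishes a look-aside cache from a read-through one.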

Why It Matters

- Reduced Latency: Quickly delivers user-specific data like recommendations or watch histories.

- Scalability: Reduces stress on backend services, enabling smoother operations at scale.

- Enhanced User Experience: Faster response times lead to happier users.

Example Use Case

When a user opens Netflix, their home page loads instantly with personalized recommendations and thumbnails, thanks to EVCache’s ability to serve pre-fetched data.

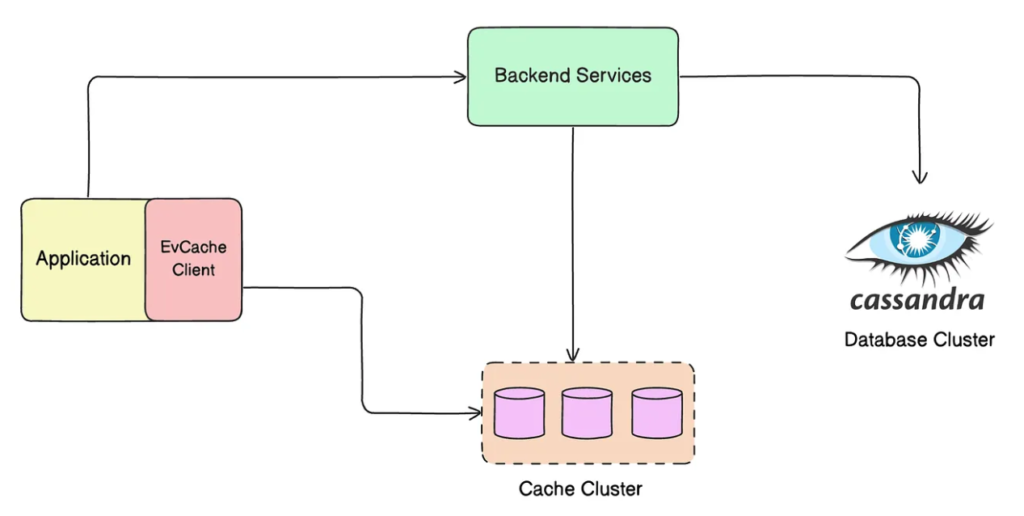

2. Transient Data Store: Managing Temporary Session Data

How It Works

EVCache acts as a transient data store for short-lived information, such as playback session details.

- Session information, including playback time and device data, is stored in EVCache when a user begins watching.

- As the session progresses, updates like pausing, rewinding, or skipping are also saved to the cache.

- At the end of the session, data may either be discarded or stored permanently for analytics.
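One way to picture a transient store is a key-value map whose entries expire on their own. The sketch below assumes a time-to-live (TTL) per entry, which the description above does not spell out; `TransientStore`, the session key, and the field names are hypothetical, and a fake clock is injected so the expiry is easy to observe.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TransientStore:
    """Toy key-value store whose entries expire after a TTL (in seconds)."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._data: Dict[str, Tuple[float, Dict[str, Any]]] = {}

    def put(self, key: str, value: Dict[str, Any]) -> None:
        # Each write refreshes the expiry time for the key.
        self._data[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[Dict[str, Any]]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Entry outlived its TTL: discard it.
            del self._data[key]
            return None
        return value


# A fake clock (assumption for the demo) lets us advance time by hand.
now = [0.0]
sessions = TransientStore(ttl_seconds=60.0, clock=lambda: now[0])

sessions.put("session:abc", {"position_s": 0, "device": "phone"})    # playback starts
sessions.put("session:abc", {"position_s": 754, "device": "phone"})  # user pauses
resumed = sessions.get("session:abc")  # another device reads the latest position

now[0] = 61.0                          # the session goes idle past its TTL
expired = sessions.get("session:abc")  # the entry is gone
```

Letting entries expire keeps the store small without a separate cleanup job; anything worth keeping for analytics would be copied elsewhere before the TTL runs out.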

Why It Matters

- Low-Latency Updates: Real-time session data allows for seamless playback and user interactions.

- Service Coordination: Ensures multiple services have access to up-to-date session data.

- Cost Efficiency: Optimized for temporary storage, reducing unnecessary resource usage.

Example Use Case

If a user pauses a movie on their smartphone and resumes on their smart TV, EVCache ensures that the playback position is updated and instantly available across devices.

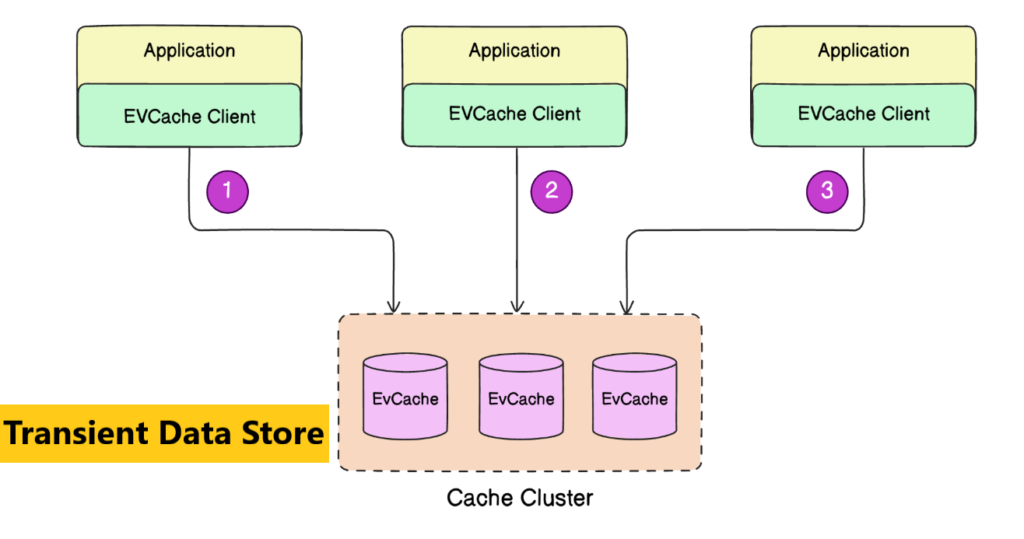

3. Primary Store: Precomputing for Personalized Experiences

How It Works

For some functionalities, such as generating personalized home pages, EVCache serves as a primary data store.

- Each night, large-scale compute systems analyze user preferences, watch history, and other data to precompute a personalized home page.

- This precomputed data, including thumbnails and recommendations, is stored in EVCache.

- When the user logs in, the precomputed home page data is served instantly.
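The precompute-then-serve flow can be sketched as a batch job that writes results and a read path that only ever reads them. Everything below is illustrative: the `CATALOG`, the ranking rule in `precompute_home_page`, and the `home:<user_id>` key format are assumptions for the example, and a dictionary again stands in for EVCache.

```python
from typing import Dict, List

# Hypothetical catalog, grouped by genre.
CATALOG: Dict[str, List[str]] = {
    "sci-fi": ["Dark", "Stranger Things", "Black Mirror"],
    "drama": ["The Crown", "Ozark"],
}
GENRE_OF = {title: genre for genre, titles in CATALOG.items() for title in titles}


def precompute_home_page(watch_history: List[str]) -> List[str]:
    """Toy ranking: unwatched titles from genres the user has already watched."""
    watched = set(watch_history)
    genres = sorted({GENRE_OF[t] for t in watch_history if t in GENRE_OF})
    return [t for g in genres for t in CATALOG[g] if t not in watched]


def nightly_batch(histories: Dict[str, List[str]], store: Dict[str, List[str]]) -> None:
    """Offline job: compute every user's home page and write it to the store."""
    for user_id, history in histories.items():
        store[f"home:{user_id}"] = precompute_home_page(history)


def load_home_page(user_id: str, store: Dict[str, List[str]]) -> List[str]:
    """Request path: a single read, with no computation and no backend fallback."""
    return store.get(f"home:{user_id}", [])


store: Dict[str, List[str]] = {}
nightly_batch({"u1": ["Dark"], "u2": ["Ozark", "Dark"]}, store)

home_u1 = load_home_page("u1", store)
home_u2 = load_home_page("u2", store)
```

Unlike the look-aside pattern, there is no database behind the read path to fall back on, which is what makes the cache the primary store for this data.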

Why It Matters

- Precomputed Efficiency: Reduces the need for on-the-fly computations during peak traffic.

- Scalability: Supports millions of unique user profiles globally.

- Instant Access: Guarantees quick loading times for home page content.

Example Use Case

When a user logs into Netflix, their personalized home page appears immediately, complete with curated categories and recommendations.

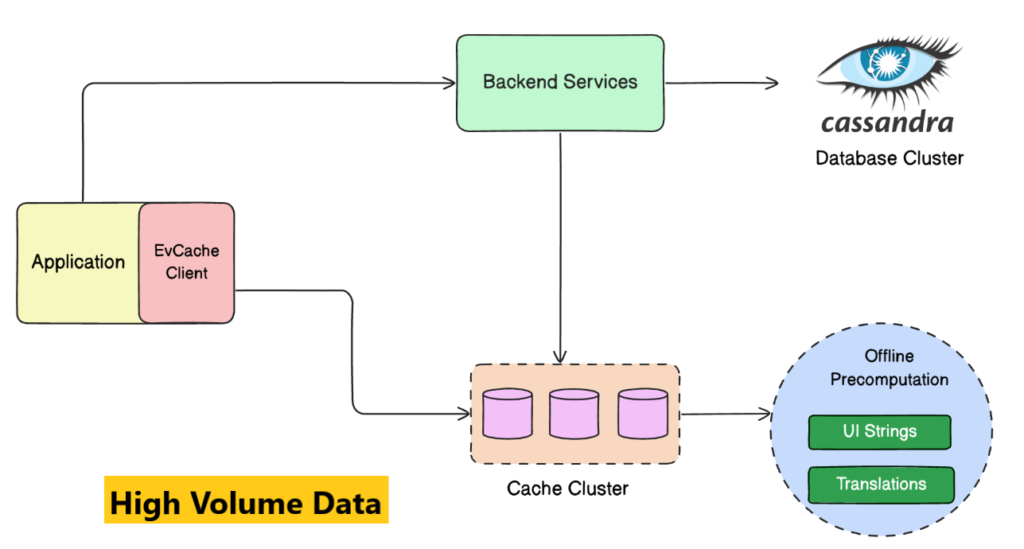

4. High-Volume Data: Ensuring Reliable Access

How It Works

High-demand elements like UI strings and translations are cached in EVCache to handle heavy traffic efficiently.

- Processes asynchronously compute and publish UI strings (e.g., titles, descriptions, menu text) to EVCache.

- These cached elements are accessed by the application as needed to deliver localized experiences.
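The publish-and-read split might look like the sketch below. The key format `ui:<locale>:<string_id>` and the fallback to a default locale are assumptions made for the example, not details from the description above; in a real system the publisher would run separately from the request path.

```python
from typing import Dict


def publish_strings(store: Dict[str, str], locale: str, strings: Dict[str, str]) -> None:
    """Publisher side: write every UI string for a locale into the cache."""
    for string_id, text in strings.items():
        store[f"ui:{locale}:{string_id}"] = text


def get_string(store: Dict[str, str], locale: str, string_id: str,
               default_locale: str = "en") -> str:
    """Reader side: try the user's locale, then the default, then the raw id."""
    localized = store.get(f"ui:{locale}:{string_id}")
    if localized is not None:
        return localized
    return store.get(f"ui:{default_locale}:{string_id}", string_id)


store: Dict[str, str] = {}
publish_strings(store, "en", {"menu.home": "Home", "menu.my_list": "My List"})
publish_strings(store, "es", {"menu.home": "Inicio"})

home_es = get_string(store, "es", "menu.home")        # localized string exists
my_list_es = get_string(store, "es", "menu.my_list")  # falls back to English
unknown = get_string(store, "fr", "menu.unknown")     # falls back to the id itself
```

Because the strings are written ahead of time, the request path does at most two reads per string and never waits on translation work.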

Why It Matters

- Global Reach: Ensures that users in different regions receive localized content without delays.

- High Availability: Handles massive volumes of requests during peak times.

- Improved User Experience: Consistent, fast-loading interfaces create a seamless experience.

Example Use Case

When a user accesses Netflix, UI elements like navigation menus and localized movie titles are fetched instantly, ensuring the platform is ready to use without interruptions.

Key Benefits of Netflix’s Caching Strategy

| Feature | Impact |
|---|---|
| Reduced Latency | Faster data retrieval enhances the streaming experience. |
| Improved Scalability | Frees up backend resources for complex computations. |
| Localized Content | Ensures that users worldwide receive relevant, language-specific content. |
| Seamless Transitions | Enables cross-device synchronization for an uninterrupted user experience. |

Conclusion

Netflix’s use of caching through EVCache exemplifies how innovative technology can elevate the user experience. By reducing latency, improving scalability, and ensuring data availability, Netflix keeps us glued to our screens with minimal disruptions.

As we marvel at the smooth streaming experience, it’s clear that caching is not just a technical solution; it’s a cornerstone of user satisfaction.